Lecture 15: Statistics

- Random Variables and Expectation

- Linearity of Expectation

- Expected Time to Success

- Standard Deviation and Variance

Random Variables and Expectation

Definition

An (integer-valued) random variable X is a function from Ω to Z. In other words, it associates a number value with every outcome.

Random variables are often denoted by X,Y,Z,…

We extend arithmetic to random variables in the natural way.

Definition

Given random variable $X : Ω → Z$, random variable $Y : Ω → Z$ and integer k, we can combine X,Y and k to obtain the following functions on all $ω ∈ Ω$:

Addition of variables: $(X+Y)(ω) = X(ω)+Y(ω)$

Multiplication of variables: $(X·Y)(ω) = X(ω)·Y(ω)$

Scalar addition: $(X+k)(ω) = X(ω)+k$

Scalar multiplication: $(k·X)(ω) = k·X(ω)$

Example

Random variable X: value of rolling one die

Ω={1,2,3,4,5,6}

X(i) = i

Example

Random variable $X_s$: sum of rolling two dice

Ω={(1,1),(1,2),…,(6,6)}

$X_s((i,j)) = i + j$

Question

Is $X_s = X +X$?

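No! $X_s$ is the sum of two independent random variables: the outcome of each roll is independent of the other. By contrast, $X + X$ adds a variable to itself, so its two summands are completely dependent (both are the result of the same roll), and $X + X = 2X$ only takes even values. The correct decomposition is

$X_s = X + Y$

where $X : (ω_1, ω_2) ↦ ω_1$ and $Y : (ω_1, ω_2) ↦ ω_2$ are independent and identically distributed (i.i.d.)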
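The difference between adding a variable to itself and adding two separate rolls can be checked by enumeration. The sketch below assumes fair dice (a uniform distribution on the sample space); the helper name `distribution` is made up for this illustration.

```python
from collections import Counter
from fractions import Fraction
from itertools import product


def distribution(rv, omega):
    """Return {value: probability} for rv under the uniform distribution on omega."""
    counts = Counter(rv(w) for w in omega)
    return {v: Fraction(c, len(omega)) for v, c in counts.items()}


one_die = list(range(1, 7))
two_dice = list(product(one_die, repeat=2))

# X + X: the same roll counted twice (defined on a single die).
double_x = distribution(lambda w: w + w, one_die)
# X_s: sum of two separate rolls (defined on pairs of rolls).
x_s = distribution(lambda w: w[0] + w[1], two_dice)

print(sorted(double_x))  # [2, 4, 6, 8, 10, 12]
print(x_s[7])            # 1/6
```

The doubled roll can never produce an odd total, whereas the sum of two rolls takes every value from 2 to 12.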

Expectation

Definition

The expected value (often called “expectation” or “average”) of a random variable X is

$E(X) = \sum_{k \in \mathbb{Z}} P(X = k)·k$

Take Notice

Expectation is a truly universal concept; it is the basis of all decision making, of estimating gains and losses, in all actions under risk. Historically, a rudimentary concept of expected value arose long before the notion of probability.

Examples: dice

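The expected value when rolling one die is:

$E(X) = \frac{1}{6}·(1+2+3+4+5+6) = 3.5$

The expected sum when rolling two dice is

$E(X_s) = \frac{1}{36}·2 + \frac{2}{36}·3 + \dots + \frac{6}{36}·7 + \dots + \frac{1}{36}·12 = 7$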
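As a quick check of the two dice expectations, here is a minimal sketch that averages a random variable over a finite sample space. It assumes fair dice, so every outcome is equally likely; `expectation` is an illustrative helper, not part of the lecture.

```python
from fractions import Fraction
from itertools import product


def expectation(rv, omega):
    """E(X) under the uniform distribution on the finite sample space omega."""
    return sum(Fraction(rv(w)) for w in omega) / len(omega)


one_die = range(1, 7)
two_dice = list(product(one_die, repeat=2))

e_one = expectation(lambda w: w, one_die)
e_sum = expectation(lambda w: w[0] + w[1], two_dice)
print(e_one, e_sum)  # 7/2 7
```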

Example: lottery

Question. Buy one lottery ticket for $1. The only prize is $1M. Each ticket has probability $6 · 10^{−7}$ of winning. What is the expected value of the lottery ticket?

Answer

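There are two types of ticket, winning tickets and losing tickets, so we let Ω = {win,lose}, and $X_L : Ω → \mathbb{Z}$ such that $X_L(win) = 999{,}999$ and $X_L(lose) = −1$ (net winnings in dollars). Then

$E(X_L) = 6·10^{−7}·999{,}999 + (1 − 6·10^{−7})·(−1) = −0.4$

so a ticket loses 40c on average.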

Linearity of Expectation

Theorem (linearity of expected value)

For any random variables X,Y and integer k:

$E(X+Y) = E(X)+E(Y)$ and $E(k·X) = k·E(X)$

Example 1

The expected sum when rolling two dice can be computed as

$E(X_s) = E(X) + E(Y) = 3.5 + 3.5 = 7$

where X and Y are the values of the first and second die.

Example 2

Question. Calculate $E(S_n)$, where $S_n \overset{def}{=}$ the number of heads in n tosses of a fair coin.

Answer 1. (the ‘hard way’)

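Using the definition of the expectation, we have

$E(S_n) = \sum_{k=0}^{n} P(S_n = k)·k$

Since there are $\binom{n}{k}$ sequences of n coin tosses with k heads, and each sequence has the probability $\frac{1}{2^n}$, this gives

$E(S_n) = \sum_{k=0}^{n} \frac{1}{2^n}\binom{n}{k}·k = \frac{n}{2^n}\sum_{k=1}^{n}\binom{n-1}{k-1} = \frac{n}{2^n}·2^{n-1} = \frac{n}{2}$

where we used $k\binom{n}{k} = n\binom{n-1}{k-1}$ and the ‘binomial identity’ $\sum^{n}_{k=0}\binom{n}{k}=2^n$ (here with n−1 in place of n) to simplify.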

Answer 2. (the ‘easy way’)

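When n = 1, we have that $S_1$ : {tails,heads} → {0,1} denotes the number of heads obtained in one coin toss, so $E(S_1) = \frac{1}{2}·0 + \frac{1}{2}·1 = \frac{1}{2}$.

For general n > 1, we can think of $S_n$ as being equivalent to repeating a single $S_1$ coin toss n distinct (and independent) times. Let $S_{1_i}$ denote coin toss number i in this sequence. Then

$E(S_n) = E(S_{1_1} + S_{1_2} + \dots + S_{1_n}) = E(S_{1_1}) + E(S_{1_2}) + \dots + E(S_{1_n}) = \frac{n}{2}$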
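Both routes to $E(S_n)$ can be compared numerically. The sketch below assumes a fair coin; the function names are illustrative only.

```python
from fractions import Fraction
from math import comb


def expected_heads_hard(n):
    """Sum k * P(S_n = k) over k, with P(S_n = k) = C(n, k) / 2^n."""
    return sum(Fraction(comb(n, k), 2**n) * k for k in range(n + 1))


def expected_heads_easy(n):
    """n times E(S_1), by linearity of expectation."""
    e_s1 = Fraction(1, 2)
    return n * e_s1


for n in (1, 2, 5, 10):
    print(n, expected_heads_hard(n), expected_heads_easy(n))
```

The two columns agree for every n tried, and both equal n/2.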

Observations

Fact

If $X_1,X_2,…,X_n$ are independent, identically distributed (i.i.d) random variables, then $E(X_1+X_2+…+X_n) = E(nX_1) = nE(X_1)$.

Take Notice

$X_1 +X_2+…+X_n$ and $nX_1$ are very different random variables.

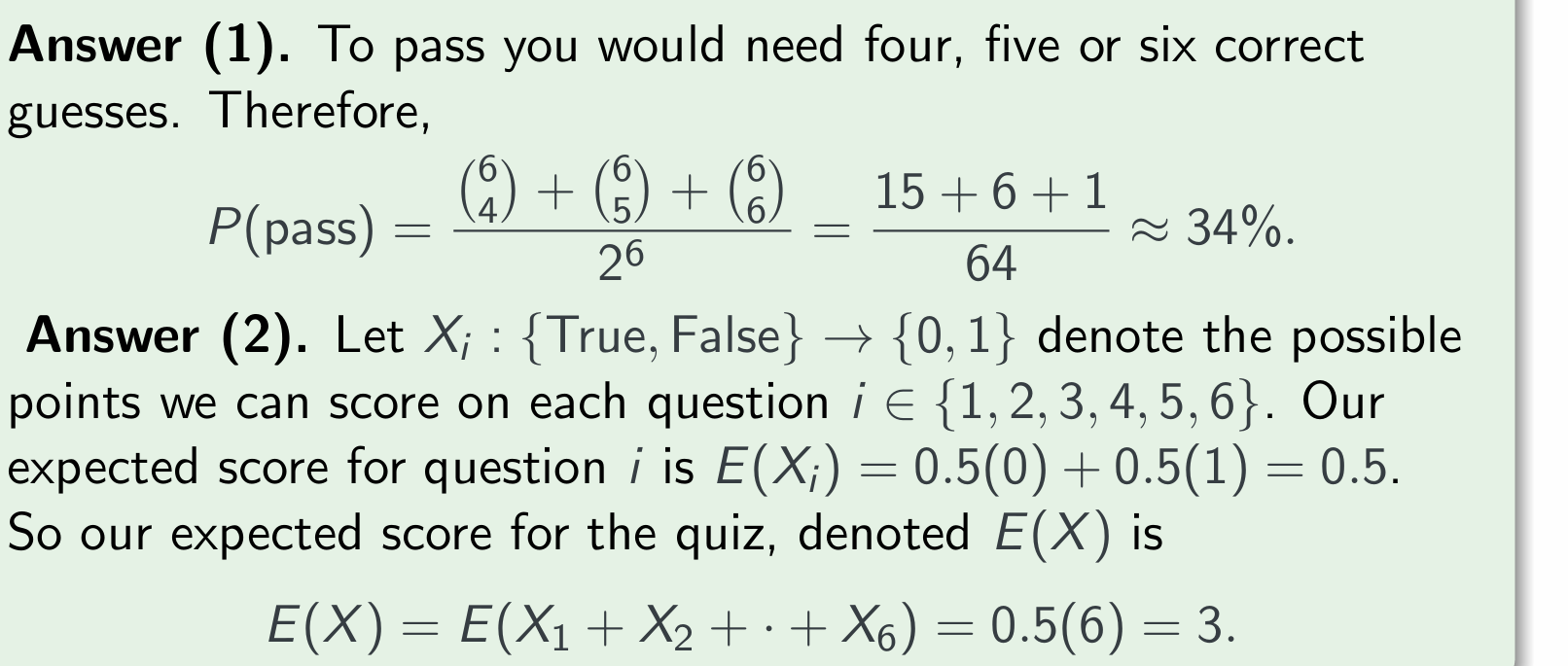

Exercises 1

Question. You face a quiz consisting of six true/false questions, and your plan is to guess the answer to each question (randomly, with probability 0.5 of being right). There are no negative marks, and answering four or more questions correctly suffices to pass.

What is the probability of passing?

What is the expected score?
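One way to check an answer to this exercise is to tabulate the distribution of the number of correct guesses. This is a sketch under the assumption that each correct answer is worth one mark; the variable names are illustrative.

```python
from fractions import Fraction
from math import comb

n = 6  # number of true/false questions, each guessed correctly with probability 1/2

# P(exactly k correct) = C(n, k) / 2^n
pmf = {k: Fraction(comb(n, k), 2**n) for k in range(n + 1)}

p_pass = sum(pmf[k] for k in range(4, n + 1))
expected_score = sum(k * p for k, p in pmf.items())

print(p_pass, expected_score)  # 11/32 3
```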

Exercises 2

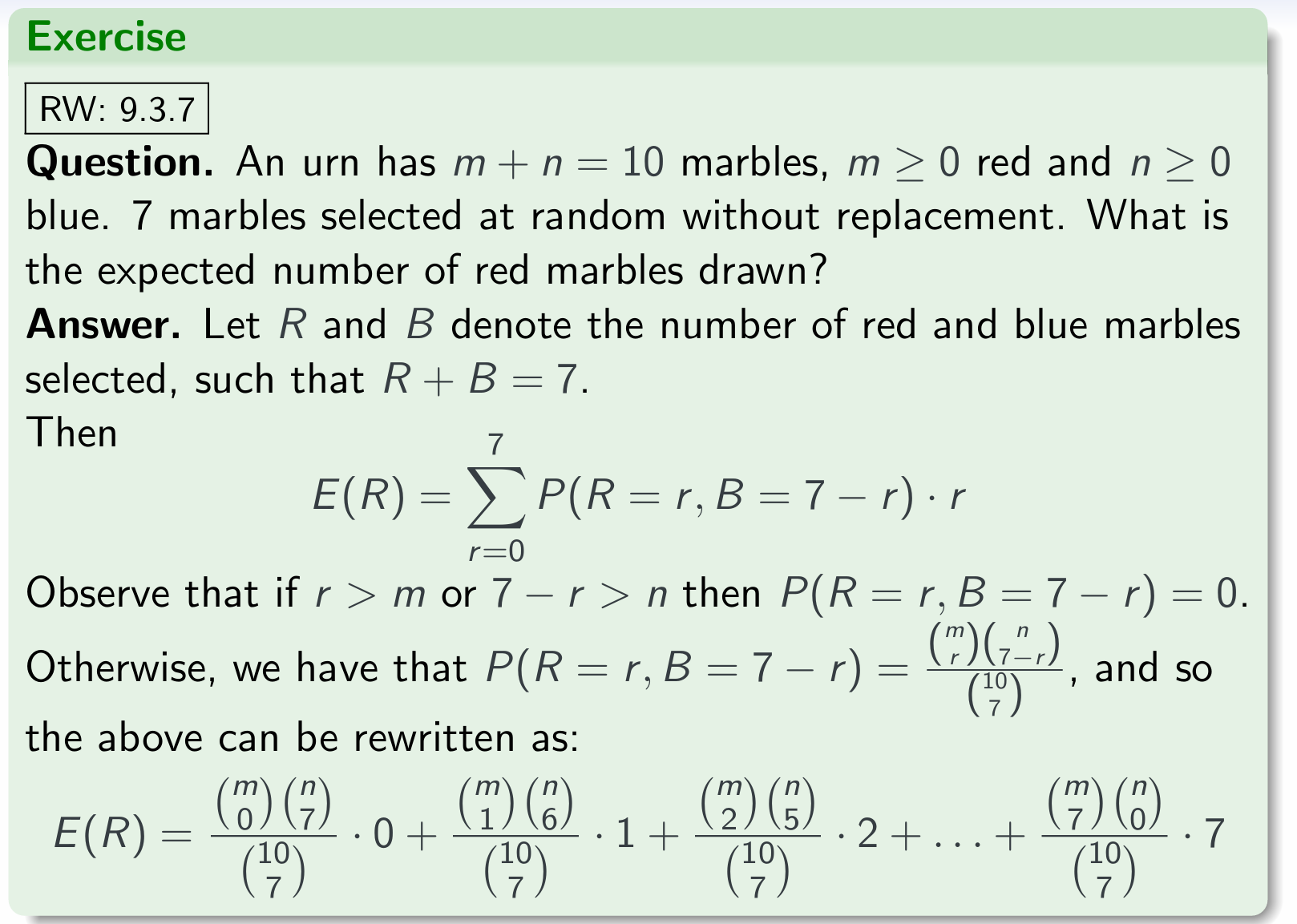

Question. An urn has $m+n=10$ marbles, m≥0 red and n≥0 blue. 7 marbles are selected at random without replacement. What is the expected number of red marbles drawn?

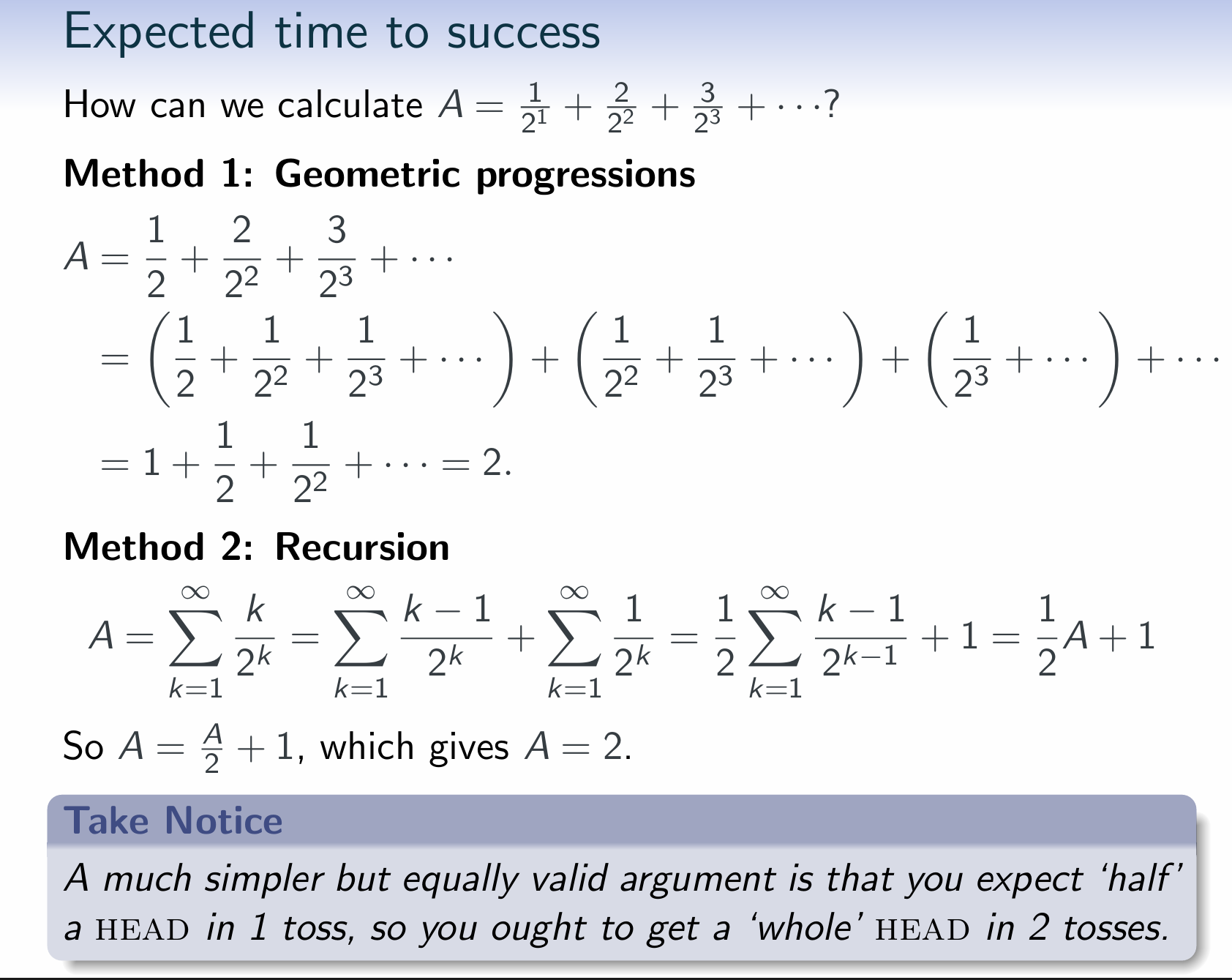
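By linearity, each of the 7 drawn marbles is red with probability m/10, which suggests an expected count of 7m/10. The brute-force sketch below checks this by enumerating all equally likely draws; `expected_red` is an illustrative helper.

```python
from fractions import Fraction
from itertools import combinations


def expected_red(m, total=10, drawn=7):
    """Average number of red marbles over all equally likely draws."""
    marbles = ["red"] * m + ["blue"] * (total - m)
    draws = list(combinations(range(total), drawn))
    reds = sum(sum(marbles[i] == "red" for i in draw) for draw in draws)
    return Fraction(reds, len(draws))


for m in range(11):
    print(m, expected_red(m))
```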

Expected Time to Success

Question. Find the average waiting time for the first head, with no upper bound on the ‘duration’ (one allows for all possible sequences of tosses, regardless of how many times tails occur initially).

Answer. Let Ω be the sample space of all possible sequences of H and T.

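Let $X_w : Ω →\mathbb{N}$ such that $X_w(ω)$ is the first location in ω containing an H (i.e. the waiting time for ω). For example, $X_w(TTHTH···)=3$.

Assuming a fair coin, $P(X_w = k) = \frac{1}{2^k}$ (k−1 tails followed by a head). Then the average waiting time is

$E(X_w) = \sum_{k=1}^{\infty} k·P(X_w = k) = \sum_{k=1}^{\infty} \frac{k}{2^k} = 2$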
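The infinite sum for the waiting time can be explored through its partial sums. This sketch assumes a fair coin, so that $P(X_w = k) = 1/2^k$; `partial_expectation` is an illustrative name.

```python
from fractions import Fraction


def partial_expectation(terms):
    """Sum of k * P(X_w = k) for k = 1..terms, with P(X_w = k) = 1 / 2^k."""
    return sum(Fraction(k, 2**k) for k in range(1, terms + 1))


for terms in (5, 10, 20, 40):
    print(terms, float(partial_expectation(terms)))
```

The partial sums increase towards 2; in exact arithmetic the sum of the first N terms is $2 − \frac{N+2}{2^N}$.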

Expected time to success

Theorem

If the probability of success is p then:

- The expected number of (indep.) trials until the first success (counting the successful trial) is $\frac{1}{p}$

- The expected number of (indep.) trials until the k-th success (counting the successful trials) is $\frac{k}{p}$
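A small simulation can make the theorem plausible. The sketch below is only an empirical check with a fixed seed (not a proof), and the function names are made up for illustration.

```python
import random


def trials_until(k, p, rng):
    """Count independent trials until the k-th success (success probability p)."""
    trials = successes = 0
    while successes < k:
        trials += 1
        if rng.random() < p:
            successes += 1
    return trials


def average_trials(k, p, runs=20_000, seed=0):
    """Average of trials_until over many simulated runs."""
    rng = random.Random(seed)
    return sum(trials_until(k, p, rng) for _ in range(runs)) / runs


print(average_trials(1, 1 / 6))  # should be close to 1/p = 6
print(average_trials(3, 1 / 6))  # should be close to k/p = 18
```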

Exercise 1

Question. A die is rolled until the first 4 appears. What is the expected waiting time?

Answer

The probability of rolling a 4 is $p = \frac{1}{6}$, hence E(no. of rolls until first 4) = $\frac{1}{p}$ = 6.

Exercise 2

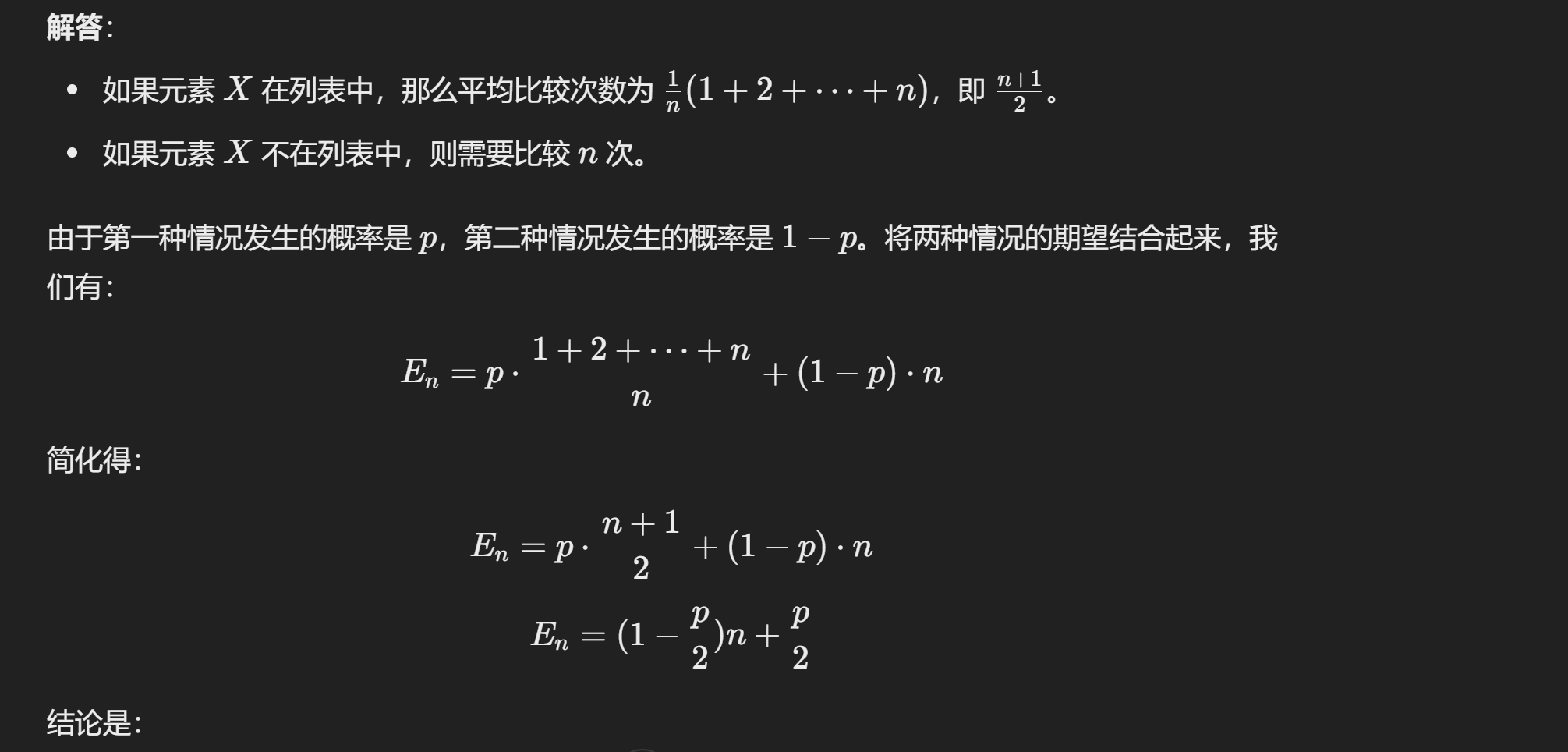

Question. To find an object $X$ in an unsorted list L of n elements, one needs to search linearly through L. Let the probability of X ∈ L be p. Then there is 1−p likelihood of X being absent altogether. Find the expected number of comparison operations.

Answer. If the element X is in the list, then the number of comparisons averages to $\frac{1}{n}(1+ \dots + n)$. If X is absent, we need n comparisons.

The first case has probability p, the second 1 − p. Combining these we find

$E_n = p·\frac{n+1}{2} + (1−p)·n = \left(1 − \frac{p}{2}\right)n + \frac{p}{2}$

As one would expect, increasing p leads to a lower $E_n$.
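The expected number of comparisons can be computed both from the closed form $p·\frac{n+1}{2} + (1−p)·n$ and directly from the cases. The sketch assumes that, when X is present, it is equally likely to be at each of the n positions (as the averaging in the answer implies); both function names are illustrative.

```python
from fractions import Fraction


def expected_comparisons(n, p):
    """Closed form: p * (n + 1) / 2 + (1 - p) * n."""
    return p * Fraction(n + 1, 2) + (1 - p) * n


def expected_comparisons_direct(n, p):
    """Average over the n equally likely positions, plus the 'absent' case."""
    present = sum(p / n * i for i in range(1, n + 1))  # found at position i
    absent = (1 - p) * n                                # scanned the whole list
    return present + absent


p = Fraction(1, 2)
print(expected_comparisons(10, p), expected_comparisons_direct(10, p))  # 31/4 31/4
```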

Success vs Expected value

Question

Does high probability of success lead to a high expected value?

Generally, no.

Example

Buying more tickets in the lottery increases your chances of winning, but the expected value of winnings decreases.

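Note: a win pays $35 and the $1 stake on the winning number is kept. In Strategy 2, a win (probability p = 24/37) therefore nets 35 − 24 + 1 = 12 dollars.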

Roulette (outcomes 0,1,…,36). Win: $35 × bet$

Strategy 1: Bet $1 on a single number

- Probability of winning: $\frac{1}{37}$

- Expected winnings: $\frac{1}{37}·35+\frac{36}{37}·(−1) = −\frac{1}{37} \approx −0.027$ dollars, i.e. about −2.7c

Strategy 2: Place $1 bets on 24 numbers, selected from among 0 to 36.

- Probability of winning: $\frac{24}{37} \approx 65\%$

- Expected winnings:

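- If one of the numbers comes up, win $35 from the bet on that number and lose $23 from the bets on the remaining numbers, a net gain of $12; otherwise lose all $24.

So expected winnings are:

$\frac{24}{37}·12+\frac{13}{37}·(−24) = −\frac{24}{37} \approx −0.65$ dollars, i.e. about −65c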
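The two roulette strategies can be compared with a short calculation. This is a sketch assuming $1 bets on distinct numbers, a payout of 35 times the bet, and 37 equally likely outcomes; `expected_winnings` is an illustrative helper.

```python
from fractions import Fraction


def expected_winnings(numbers_covered, payout=35, pockets=37):
    """Expected net winnings when betting $1 on each of `numbers_covered` numbers."""
    p_win = Fraction(numbers_covered, pockets)
    net_if_win = payout - (numbers_covered - 1)  # win one bet, lose the others
    net_if_lose = -numbers_covered               # lose every bet
    return p_win * net_if_win + (1 - p_win) * net_if_lose


print(expected_winnings(1))   # -1/37
print(expected_winnings(24))  # -24/37
```

Covering more numbers raises the probability of winning, but the expected loss grows in proportion to the total amount staked.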

Gambler’s ruin

Many so-called ‘winning systems’ that purport to offer a winning strategy do something similar: they provide a scheme for frequent, relatively moderate wins, but at the cost of an occasional very big loss.

It turns out (it is a formal theorem) that there can be no system that converts an ‘unfair’ game into a ‘fair’ one. In the language of decision theory, ‘unfair’ denotes a game whose individual bets have negative expectation.

It can be easily checked that any individual bets on roulette, on lottery tickets or on just about any commercially offered game have negative expected value.

Standard Deviation and Variance

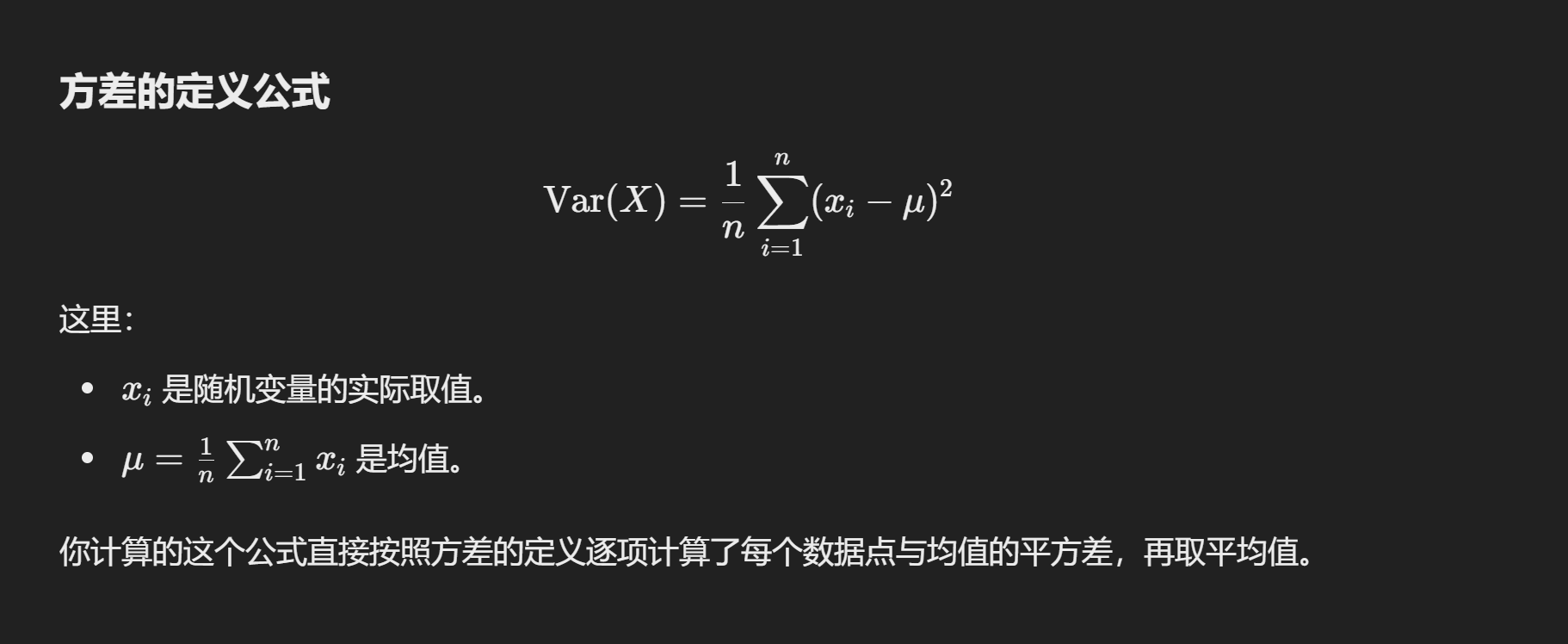

Definition

For random variable X with expected value (or: mean) $µ = E(X)$, the standard deviation of X is

$σ = \sqrt{E((X − µ)^2)}$

and the variance of X is

$σ^2 = E((X − µ)^2)$

Standard deviation and variance measure how spread out the values of a random variable are. The smaller $σ^2$ the more confident we can be that $X(ω)$ is close to $E(X)$, for a randomly selected $ω$.

Take Notice

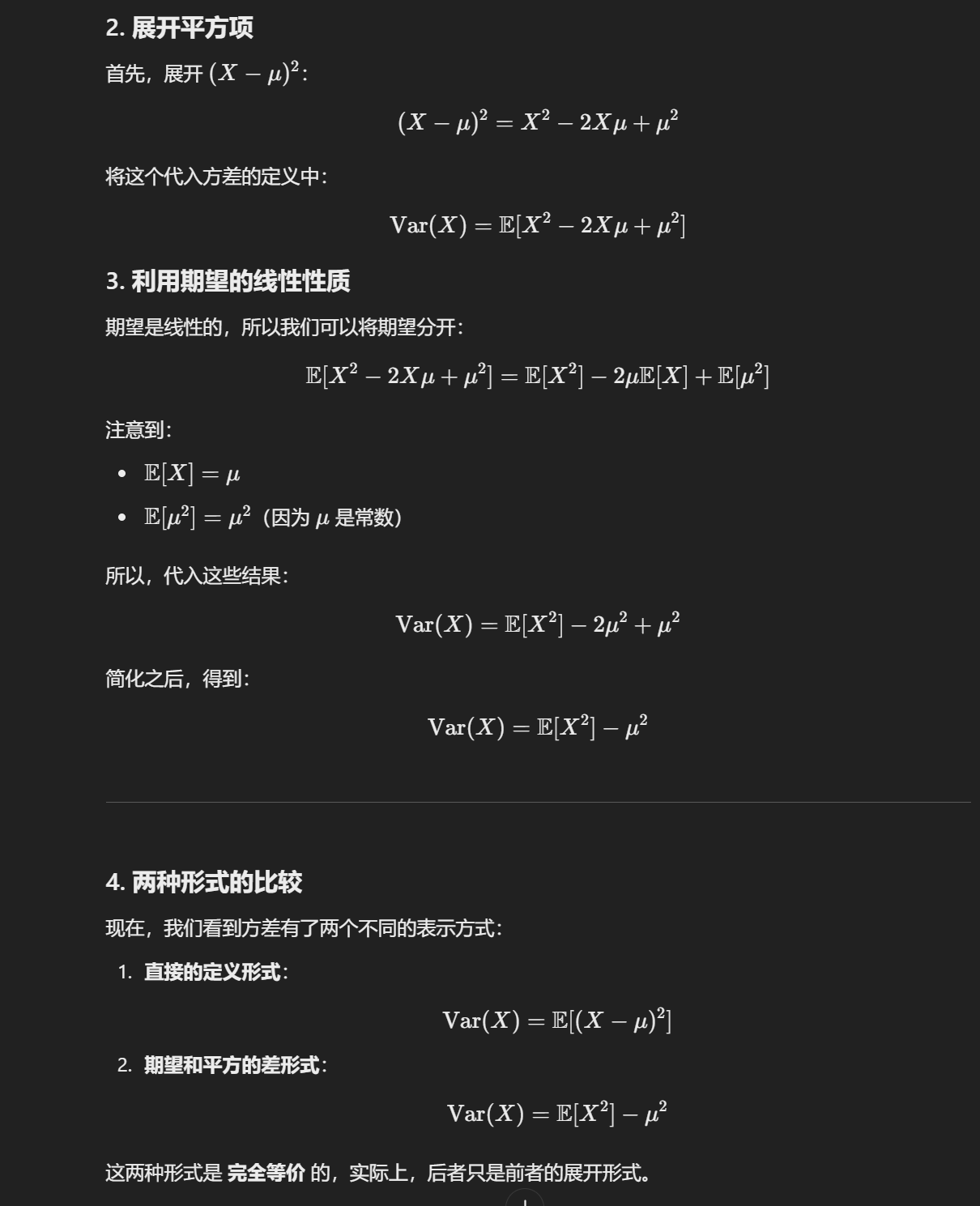

The variance can be calculated as $E((X − µ)^2) = E(X^2)−µ^2$, where $E(X^2) = \sum_{k \in \mathbb{Z}} P(X = k)·k^2$

Note: this variance formula is essentially the same as the one I learned in China; it is just another, simplified form.

Example

Question. Let the random variable $X_d \overset{def}{=}$ value of a rolled die. What is (a) the mean, (b) the variance and (c) the standard deviation?

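(a) $µ = E(X_d) = \frac{1}{6}·(1+2+3+4+5+6) = 3.5$

(b) $E(X_d^2) = \frac{1}{6}·(1+4+9+16+25+36) = \frac{91}{6}$

Hence $\sigma^2=E(X_d^2)- \mu^2 = \frac{91}{6} - \frac{49}{4} = \frac{35}{12}$

(c) $\sigma = \sqrt{\frac{35}{12}} \approx 1.71$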
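The mean, variance and standard deviation of a die roll can be computed directly, which also confirms that the definition $E((X − µ)^2)$ and the shortcut $E(X^2) − µ^2$ agree. The sketch assumes a fair six-sided die; the variable names are illustrative.

```python
from fractions import Fraction
from math import sqrt

values = range(1, 7)
p = Fraction(1, 6)  # each face is equally likely

mean = sum(p * k for k in values)
var_definition = sum(p * (k - mean) ** 2 for k in values)   # E((X - mu)^2)
var_shortcut = sum(p * k**2 for k in values) - mean**2      # E(X^2) - mu^2
std = sqrt(var_shortcut)

print(mean, var_definition, var_shortcut)  # 7/2 35/12 35/12
print(round(std, 3))                       # 1.708
```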

Exercise

Question (Supp). Two independent experiments are performed.

$p_1$ = P(1st experiment succeeds) = 0.7

$p_2$ = P(2nd experiment succeeds) = 0.2

Random variable X counts the number of successful experiments.

- (a) Expected value of X?

- (b) Probability of exactly one success?

- (c) Probability of at most one success?

- (d) Variance of X?

Answer. For experiment i, let $X_i = 1$ if the experiment is successful and $X_i = 0$ otherwise.

(a) $E(X) = E(X_1)+E(X_2) = 1·p_1 +1·p_2 = 0.7+0.2 = 0.9$.

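Note: this asks for an expected value. A success counts as 1 and a failure as 0, so each experiment has two outcomes, and success and failure are complementary events. Since the question asks for the expected number of successes, we simply add the two expectations (linearity of expectation); no multiplication is needed. The expected number of successes is 0.9, and the expected number of failures is 0.3 + 0.8 = 1.1.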

(b) $P(X = 1) = P(X_1 =1,X_2 = 0)+P(X_1 =0,X_2 = 1) =0.7·0.8 +0.3·0.2 = 0.62$.

(c) $P(X ≤ 1) = P(X = 1)+P(X_1 = X_2 =0) =0.62+0.3·0.8 = 0.86$.

(d) $σ^2 = E(X^2)−E(X)^2 = (0.62·1+0.14·4)−0.9^2 = 0.37$.

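Note: exactly one success gives X = 1 (so $X^2 = 1$), with the probability found in (b); two successes give X = 2 (so $X^2 = 4$), with probability 0.7·0.2 = 0.14.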
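All four answers can be cross-checked by enumerating the four joint outcomes of the two experiments. The sketch below uses exact fractions and assumes the experiments are independent, as stated in the question; the variable names are illustrative.

```python
from fractions import Fraction
from itertools import product

p = [Fraction(7, 10), Fraction(2, 10)]  # success probabilities p1, p2

# Probability of each value of X = X1 + X2 (independent experiments).
pmf = {0: Fraction(0), 1: Fraction(0), 2: Fraction(0)}
for x1, x2 in product((0, 1), repeat=2):
    prob = (p[0] if x1 else 1 - p[0]) * (p[1] if x2 else 1 - p[1])
    pmf[x1 + x2] += prob

mean = sum(k * q for k, q in pmf.items())
variance = sum(k**2 * q for k, q in pmf.items()) - mean**2

print(mean, pmf[1], pmf[0] + pmf[1], variance)  # 9/10 31/50 43/50 37/100
```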

Corrections are welcome: please point out any mistakes!